LLMs suffer from an obvious AI-sounding tone by default.

Large Language Models (LLMs) like OpenAI’s GPT-4 and Google’s Gemini deliver output in an out-of-the-box “machine-sounding” manner that users and observers are actively trying to stamp out. Downstream generative AI (genAI) providers like Jasper and Copy.AI employ various ways to circumvent this issue, resulting in equally varied levels of quality.

The problem is twofold.

First and foremost: human readers are starting to feel the ick — given the proliferation of AI-generated content online, a phenomenon akin to banner blindness is currently developing. Anything “AI-sounding” is suspect.

When trust is a brand’s currency online, this is a huge red flag.

Second: it’s anyone’s guess how the infamous “AI tone” will impact Search Engine Results Pages (SERPs) at a technical level. What’s clear though is that Google’s E-E-A-T guidelines implicitly cover trash AI-generated content by virtue of its criteria. This rolls back into the first problem: human readers are starting to become really averse to AI-sounding content and genuinely sick of AI-generated superficiality.

Of course, the problem with “thin” content has been around forever. GenAI just exacerbated the issue given mass production of useless content just inch-deep in value.

Wrangling Quality through Prompt Engineering

Since human language is the interface of choice for genAI, the only knobs and levers we can adjust to meet higher quality standards exist through prompting. Prompt design and prompt engineering might be divisive terms, but they’re technically the right ones to use here.

Simply put: one method of improving genAI output quality is providing detailed guidelines to serve as guardrails. For free LLMs like ChatGPT, users can input them as part of the prompt. For paid uses via API or provided user interfaces (e.g. the OpenAI Playground or Assistants interfaces), these can be input as system instructions.

And I do mean detailed. My original writing SOP (WSOP) guidelines were over 800 words, split into two categories with multiple rules and examples each.

But aside from anecdotal evidence, I needed a more qualitative assessment of the performance of my WSOP. Not only because I wanted to be sure its improvements to GPT-4 output were consistent, but also to see if it would work the same way for a different LLM model.

Experimenting with Vanilla ChatGPT and Gemini

So I conducted an experiment exploring the impact of my WSOP. I tasked two leading models, ChatGPT (running on GPT-4) and Gemini (Gemini 1.5 Pro), with generating content across a range of software development topics.

The prompts designed in this manner:

(click to expand)

Use this writing SOP:

######

(WSOP inserted here)

######

Write 200 words or three paragraphs on: (Topic inserted here)

I left the initial instruction simple and inserted my WSOP within demarcation, which has been demonstrated to help with LLM “understanding” to some degree.

The actual topics are below (image):

(click to expand)

So I had ChatGPT and Gemini generate 200 words or three paragraphs using these topics as prompts first without modifications (i.e. without the top part of the prompt above that instructs the AI to use the WSOP). Then I started another thread and provided the same topics verbatim except with my WSOP as guidelines.

What were the Results of Prompt Engineering ChatGPT Vs Gemini?

Here are some key findings:

- AI can indeed be trained to write with style. We already know it’s possible to create AI-generated content that adheres to specific stylistic preferences. The outputs confirmed that both GPT-4 and Gemini showed the ability to adapt their writing style based on the guidelines provided.

- GPT-4 is more receptive to the WSOP ruleset. I developed the WSOP with GPT-3 and GPT-3.5, originally, so it makes sense that it works specifically for OpenAI’s model. I imagine Claude 3’s adjustments to the same WSOP ruleset will also be markedly different.

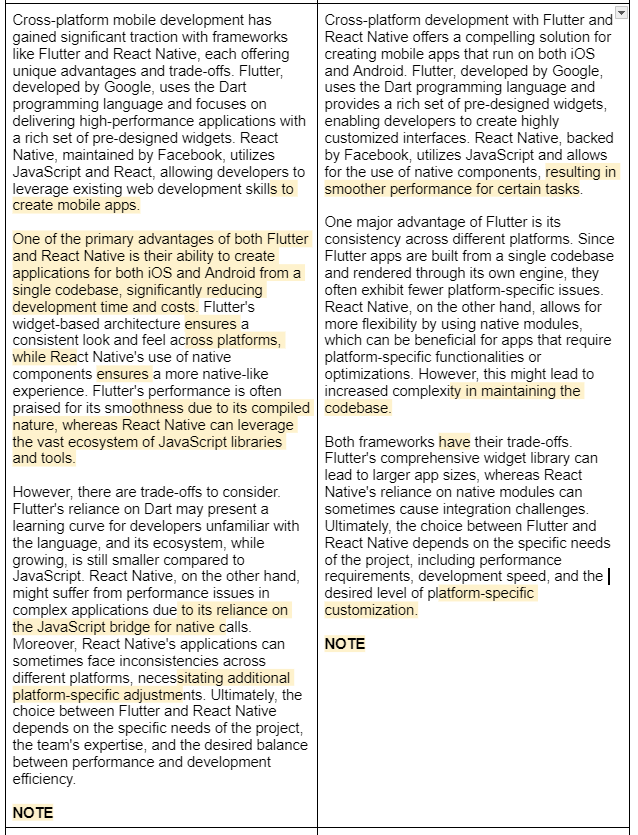

Side-by-side:

(click to expand)

Vanilla output was structurally decent, if waylaid by winding narrative and poor verbiage and phrasing in some parts.

WSOP-guided output slightly improved the already decent structure despite the need for better sentence flow and the human tendency to rely on auxiliary verbs too much.

- Gemini, in making assumptions, generally performed poorer after adopting WSOP. In three out five updated outputs, Gemini’s writing got overall worse. Refinements and improvements were present, but because of what I think was Gemini trying to extrapolate ruleset details, it made adjustments to the writing I did not expect, and output suffered as a result.

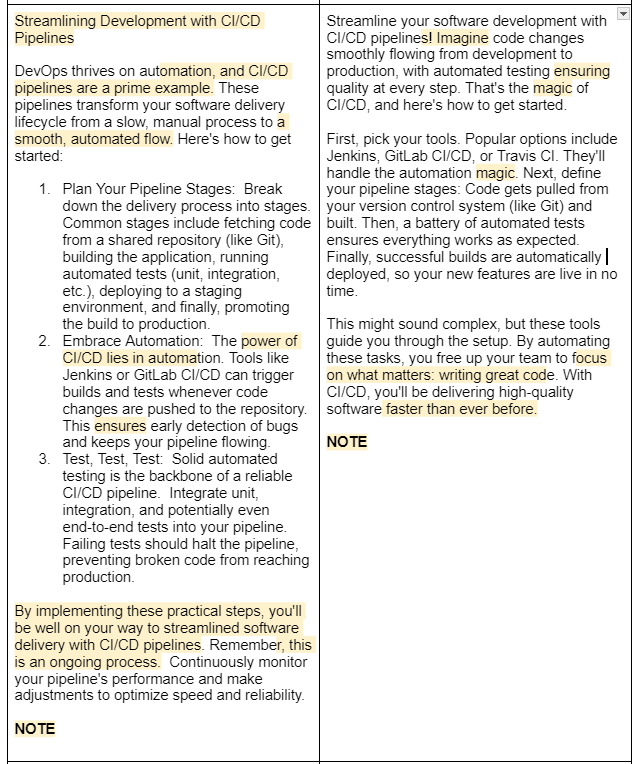

Side-by-side again:

(click to expand)

1. It removed the title. It wasn’t specified either way in the WSOP but I think in this case it’s worse off. Maybe neutral (because it was unintended).

2. It removed the numbered steps. This decreases readability / scanability.

3. It thinks exclamation points and words like “imagine” and “magic” equates to a “conversational / casual” tone, which was actually not specified explicitly in the WSOP.

Other weak points remain, like hackneyed phrases and words consistently flagged as AI favorites.

This also meant my ruleset introduced unexpected results because of inadvertent loopholes that I now had to close. A balance between clarity and subtlety is also quite powerful in influencing output, so I should also note that:

- Clear instructions are crucial. The more specific and detailed the writing guidelines, the better the AI models performed. Ambiguity or vagueness in the guidelines often led to inconsistent or undesirable outputs.

- Subtlety remains a challenge. While AI can grasp basic writing principles, it still struggles with more nuanced stylistic choices. For example, consistently maintaining a specific tone of voice or using evocative language proved to be more difficult.

Iteration is Key, so I Developed New CORE Heuristics

With this experiment and a lot of reading up on Arxiv regarding research on LLM prompting as well as User Experience (UX) for online content, I developed a CORE framework.

The CORE framework was also designed with the content pipeline in mind. It suits strategists as a guideline and writers and editors as a checklist of sorts. It also takes into consideration how a piece of content is developed — from brief to outline to writing. I took inspiration from Nielsen-Norman’s usability heuristics and applied it to written content.

The CORE heuristics provide a holistic structure, directing how every piece of content resonates with its intended audience and achieves its intended purpose. Here’s a breakdown:

C – Context:

- Relevance: The content must be pertinent to the audience’s interests, needs, or challenges. Ideal Customer Profile (ICP) or Buyer Persona objectives, pain points, and challenges can be used to inform this.

- Function: What is the content’s goal? Is it to inform, persuade, entertain, or inspire? Search intent can be used here.

- User-Focus: The content should be tailored to the target audience’s level of understanding, preferences, and communication style. The audience’s stage in their Buyer Journey or TOFU, MOFU, BOFU frameworks can be applied here.

O – Organization:

- Logical Structure: A clear outline with a logical flow of information that guarantees readability and comprehension, but also delivers marketing messaging seamlessly within the piece.

- Coherence: Smooth transitions between ideas and section, as well as messaging.

- Consistency: Maintaining a consistent tone, style, and level of detail throughout the content builds trust and credibility. As a technical aside, Co-Reference Resolution is a very interesting aspect of LLMs to look into if you’re keen on a deeper computational look at a concept that lends itself to things like pronoun antecedents in linguistics.

R – Readability:

- Clarity: Simple language, concise sentences, and active voice make the content easy to understand.

- Accuracy: Factual information, credible sources, and proper grammar and spelling enhance the content’s reliability. From a top-level view Retrieval Augmented Generation (RAG) and grounding to prevent hallucination comes into play here. But in terms of writing execution, it’s more about specificity and choosing concrete over abstract.

- Brevity: Getting to the point quickly and avoiding unnecessary jargon or wordiness keeps the reader engaged and the Hemingway App free from red highlights.

E – Engagement:

- Sentence Flow: Varying sentence length and structure creates a natural rhythm that is pleasing to read.

- Accessibility: Using accessible language and formatting elements like headings, subheadings, and bullet points improves readability and comprehension. In terms of output formatting, the key is scanability.

- Voice and Tone: Establishing a clear and consistent voice and tone helps the content connect with the audience on an emotional level.

Developing a “Writing Intuition” for AI

So with the CORE heuristics in play, what now? Well, making AI write well isn’t just about developing writing guidelines. From the perspective of a genAI-assisted content pipeline (in my view, at least), the C and O aspects of the CORE heuristics is meant for a Content Strategy AI that “orchestrates” task-specific AI — some of them focusing on outlining and some on writing.

Stanford and OpenAI experimented on a similar setup like this where a “conductor” led “expert” bots in their tasks. The method I’m developing mixes that with RAG (take a look at another similar approach here called RATT), and also a base of decision-making structures for strategic direction. This paper on AI Self-Reflection presents a good idea of what it would look like in practice.

I used the R and E aspects of the heuristics to guide the development of a new WSOP ruleset.

The idea is for genAI to rely on its own “Writing Intuition” that guides its decisions on how to write. So I developed a 17-rule set of writing intuitions divided between the concepts of Readability and Engagement, leveraging few-shot prompting and noting word choice, order, and exemplar number and specificity.

In short, I looked into how prompting techniques currently work and why, and applied it all to this new set of writing intuitions. This comprehensive meta analysis of LLM prompting was particularly helpful.

Now, the writing intuition itself won’t remarkably improve genAI prose. It needs:

- The C and O of the CORE heuristics, provided outside of the parameters of the writing-specific bot in my intended pipeline

- Well-organized and planned out structures to choose from when outlining and executing, and probably decision trees to automate decision-making (this is where the overall “strategic intuition” comes in)

- Proper research and grounding, which can be the result of RAG, AI-assisted human input, or completely manual effort

And there are already well-developed and oft-used structures in writing.

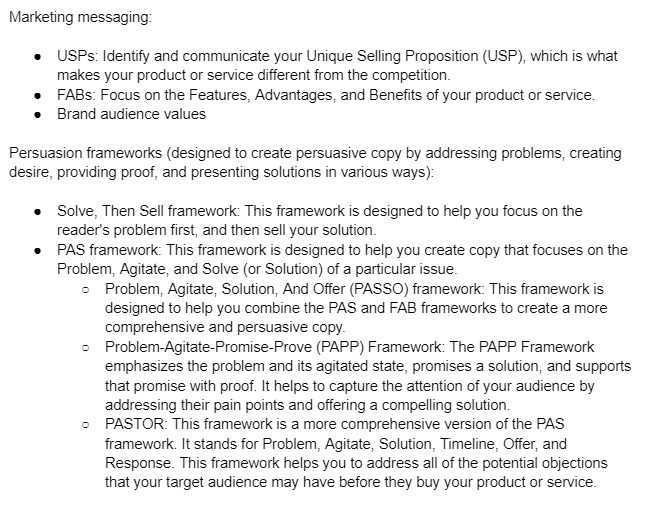

Copywriting, in particular, seems to be fond of them:

(click to expand)

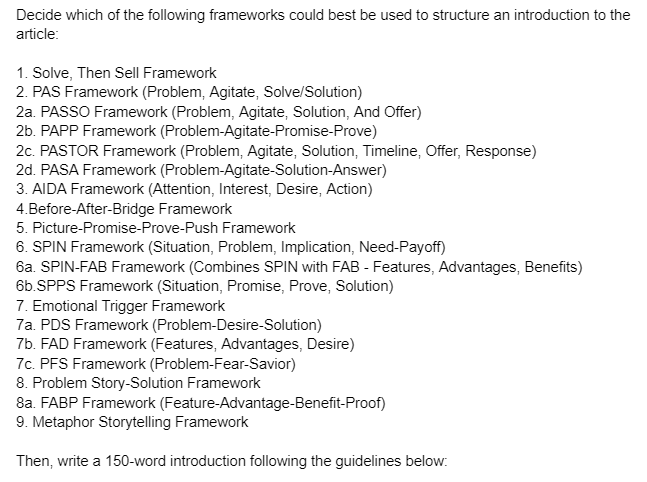

And there is a lot to try out, refine, and standardize.

For example:

(click to expand)

Ultimately, I’m trying to develop a pipeline that inherits my knowhow of content development and writing. It can then be adjusted for branded guidelines.

What’s Next for this GenAI-Assisted Pipeline?

Next is developing self-contained, reliable, and consistent modules for outlining — and if I can find a good method for automated scraping and retrieval — researching. Then putting it all together with a process orchestrated by a strategist AI with its own reasoning structures grouped into a “Content Strategy Intuition.”

For now though, it’s clear that there’s a way forward for prompt-only design and engineering to find a style that works for the user. Applying brand guidelines and samples here can easily make a bot that can churn out reliably high-quality short form content (think social media captions) — and can even choose between templates it has in its system or is referred to via RAG.

And from there? We’ll see.